After 16 months of using and testing APFS—Apple’s new file system—I’ve come to the conclusion that you probably don’t want to use it on HDDs (disks with rotating platters).

Why? Well, to understand why APFS and HDDs are not well suited, I first need to explain one of the key features of APFS: “copy on write”. “Copy on write” is the magic behind the snapshot feature in APFS, and it also allows you to copy really large files in only a couple of seconds. However, to fully understand the “copy on write” process, and the implications of using APFS with HDDs, it helps first to know how copying works with HFS Extended volumes…

Copying a file on an HFS Extended volume

HFS Extended (also called HFS+ or Mac OS Extended) has been Apple’s file system since 1998, and it is the one all Macs running macOS 10.12 or earlier use for their startup volumes.

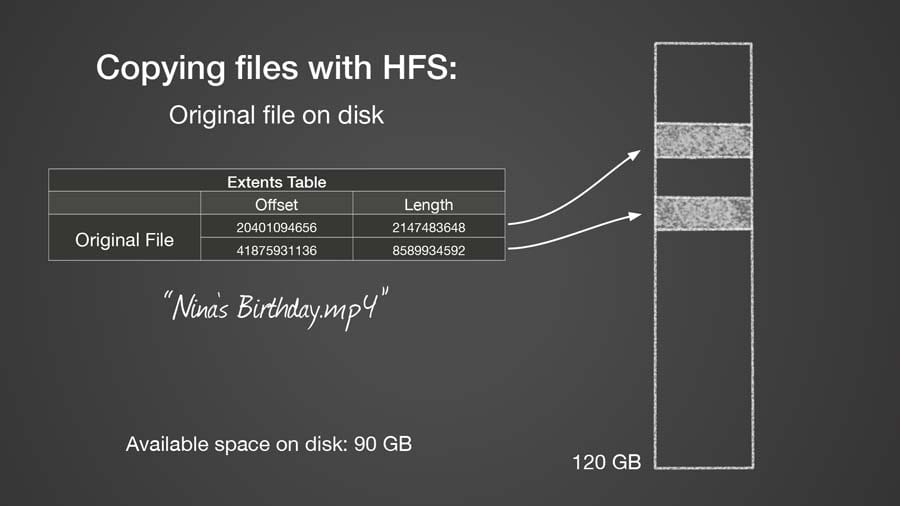

For my example, I am using a 10 GB movie file, “Nina’s Birthday.mp4”, which is stored in two separate blocks of data on the volume. When I play this movie file on my computer, my Mac will first read the first block and then go straight on to read the second block; it seamlessly moves from one block to the next so that, to the viewer, the movie appears as if it were a single block of data. Files on your Mac can exist in one or many blocks. Small files usually exist in one block, whereas larger files are often broken up into 2 or more blocks so they can fit into the available free space in a volume.


Unlike SSDs, HDDs are mechanical devices with spinning disks (aka platters) containing your volume’s data, and heads that move over the disk in order to read that data. When an HDD has to go to a new part of a disk, there is a delay while the head moves to the new location and waits for the correct part of the platter to rotate under it so it can start reading. This delay is usually 4–10 msec (1/250–1/100 of a second). You probably won’t notice a delay when reading a file which is in 2 or 3 blocks, but reading a file which is made up of 1,000 or 10,000 blocks could be painfully slow.
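
Part of that delay is rotational latency: on average the head has to wait half a revolution for the right part of the platter to come around, and the time to move the head to the right track (seek time) comes on top of that. The minimal Python sketch below computes the average rotational latency; the spindle speeds are common illustrative values, not measurements of any particular drive.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average wait for the target sector to reach the head: half a revolution."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms in a minute
    return ms_per_revolution / 2


# Illustrative spindle speeds; check your own drive's spec sheet.
for rpm in (5_400, 7_200, 10_000):
    print(f"{rpm} RPM: about {avg_rotational_latency_ms(rpm):.2f} ms")
```

At 7,200 RPM this works out to about 4.17 ms before any seek time is added, which is consistent with the 4–10 msec rule of thumb above.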

Each of the one or more blocks that make up a single file is called an extent. The file system maintains a table of these extents for each file, called an extents table. The extents table records where each block starts on the disk (its offset) and how much data it holds (its length). In this way, the computer knows where to go on the disk and how much data to read from that location. For every block of data in a file there is an offset and a length, which together make up a single extent in the extents table. This is the important thing to remember when you go on to read about how APFS deals with files. The “Nina’s Birthday.mp4” file in my example has two extents, the first of which is 2 GB in length and the second of which is 8 GB.
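
To make the idea concrete, here is a minimal Python model of an extents table: a list of (offset, length) pairs, plus a lookup that translates a position inside the file into a position on the disk. The disk offsets are invented for illustration; only the 2 GB and 8 GB lengths come from the example above. Real file systems store extents in on-disk structures measured in allocation blocks, which this sketch ignores.

```python
from dataclasses import dataclass

GB = 1024 ** 3


@dataclass(frozen=True)
class Extent:
    offset: int  # where the block starts on the disk, in bytes
    length: int  # how many bytes the block holds


def disk_position(extents: list[Extent], file_pos: int) -> int:
    """Translate a byte position within the file into a byte position on disk."""
    for ext in extents:
        if file_pos < ext.length:
            return ext.offset + file_pos
        file_pos -= ext.length  # skip past this extent and keep looking
    raise ValueError("position is past the end of the file")


# "Nina's Birthday.mp4": two extents of 2 GB and 8 GB (disk offsets are made up)
nina = [Extent(offset=100 * GB, length=2 * GB), Extent(offset=300 * GB, length=8 * GB)]

print(sum(e.length for e in nina) // GB)  # 10: total size in GB
print(disk_position(nina, 3 * GB) // GB)  # 301: 3 GB into the file is 1 GB into extent 2
```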

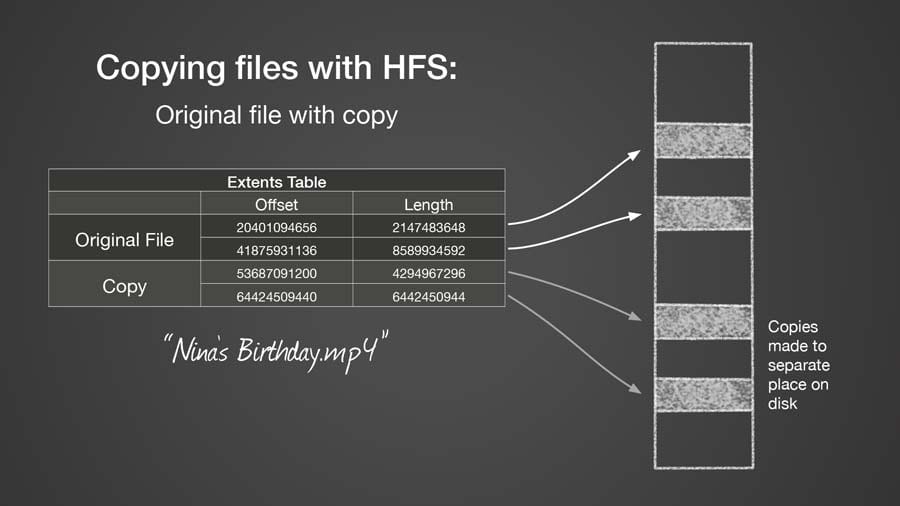

So let’s say I need to make a copy of this file. When I copy the file on my HFS Extended volume, my Mac reads the file’s data, locates free space in the volume for the copy, and then writes the copied data out. If it can, the Mac will write the new file out as a single block. However, in my example, the volume doesn’t contain a single block of free space that is 10 GB in size, so it has to write out the file as 2 blocks: the first 4 GB in length and the second 6 GB. Both the original file and the copy can be read relatively quickly because each has only 2 blocks, thus 2 extents.
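
The allocation step can be sketched in Python as follows. The policy here is a simplified one assumed for illustration (use a single free region if one is big enough, otherwise fill free regions in order); HFS Extended’s real allocator is more involved, and the free-region offsets are hypothetical.

```python
from dataclasses import dataclass

GB = 1024 ** 3


@dataclass(frozen=True)
class Extent:
    offset: int  # disk position, in bytes
    length: int  # size, in bytes


def allocate(free: list[Extent], size: int) -> list[Extent]:
    """Pick disk space for `size` bytes and return the new file's extents.
    (Simplified: does not update the free-space list afterwards.)"""
    # Best case: one free region holds the whole file, giving a single extent.
    for region in free:
        if region.length >= size:
            return [Extent(region.offset, size)]
    # Otherwise spread the file across free regions, one extent per region used.
    extents, remaining = [], size
    for region in free:
        if remaining == 0:
            break
        take = min(region.length, remaining)
        extents.append(Extent(region.offset, take))
        remaining -= take
    if remaining:
        raise OSError("not enough free space")
    return extents


# No single 10 GB gap is free, only a 4 GB and a 6 GB one (offsets are made up).
free_space = [Extent(500 * GB, 4 * GB), Extent(700 * GB, 6 * GB)]
copy = allocate(free_space, 10 * GB)
print([e.length // GB for e in copy])  # [4, 6]
```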

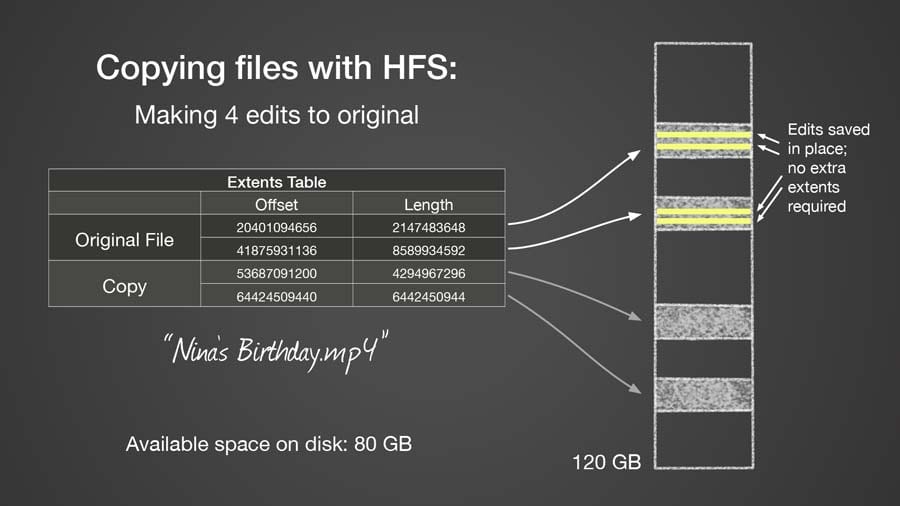

If I now edit the original movie and add four edits (say transitions between different scenes), when I save the changes, they will be written out over the existing data for this file. Even after the edits, my movie file will still contain only 2 extents and can be read relatively quickly.

Copying a file on an APFS volume

For my example with an APFS volume, I will start with the same movie file, “Nina’s Birthday.mp4,” which is made up of 2 extents, the first 2 GB in length and the second 8 GB.

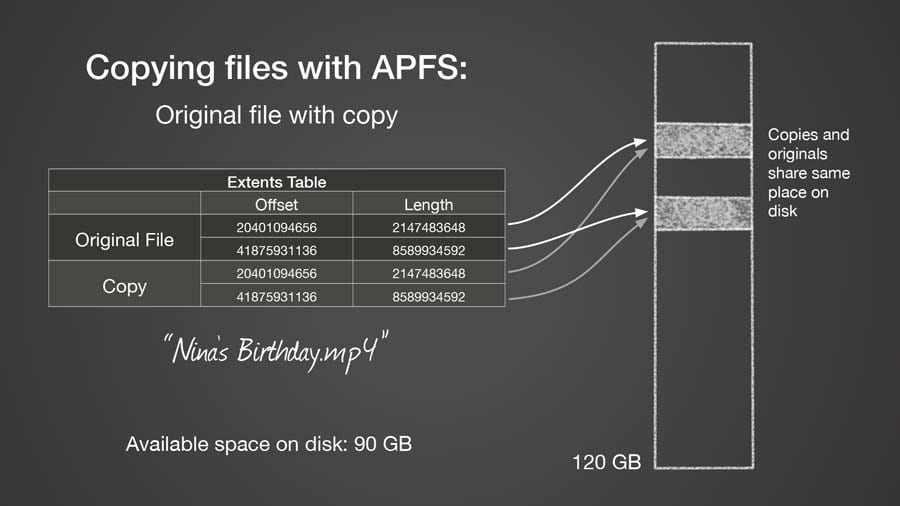

When I copy this file on an APFS volume, the file data doesn’t actually get copied to a new location on the disk. Instead, the file system knows that both the original and the copy contain the exact same data, so both the original file and its copy point to (reference) the same data. They may look like separate files in the Finder but, under the hood, both filenames point to the same place on the disk. And although the original and the copy each has its own extents table, the extents tables are identical.

This is the magic of copy on write and the reason copying a 100 GB file only takes a few seconds: no data is actually being copied! The file system is just creating a new extents table for the copy (there may be other information it needs to keep track of for the new file, but that’s not important in this example).
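
A minimal Python model of that step, assuming a simplified file record with just a name and an extents table (offsets are invented, and real APFS metadata, including how it tracks shared blocks, is far richer): the copy gets its own extents table, but every entry points at the same disk locations, so no file data moves.

```python
from dataclasses import dataclass

GB = 1024 ** 3


@dataclass(frozen=True)
class Extent:
    offset: int  # disk position, in bytes
    length: int  # size, in bytes


@dataclass
class File:
    name: str
    extents: list[Extent]


def clone(original: File, new_name: str) -> File:
    """Copy-on-write 'copy': a new extents table whose entries reuse the same disk locations."""
    return File(new_name, list(original.extents))  # new list, same Extent entries


nina = File("Nina's Birthday.mp4", [Extent(100 * GB, 2 * GB), Extent(300 * GB, 8 * GB)])
nina_copy = clone(nina, "Nina's Birthday copy.mp4")

print(nina_copy.extents == nina.extents)  # True: the tables are identical
print(nina_copy.extents is nina.extents)  # False: each file has its own table
```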

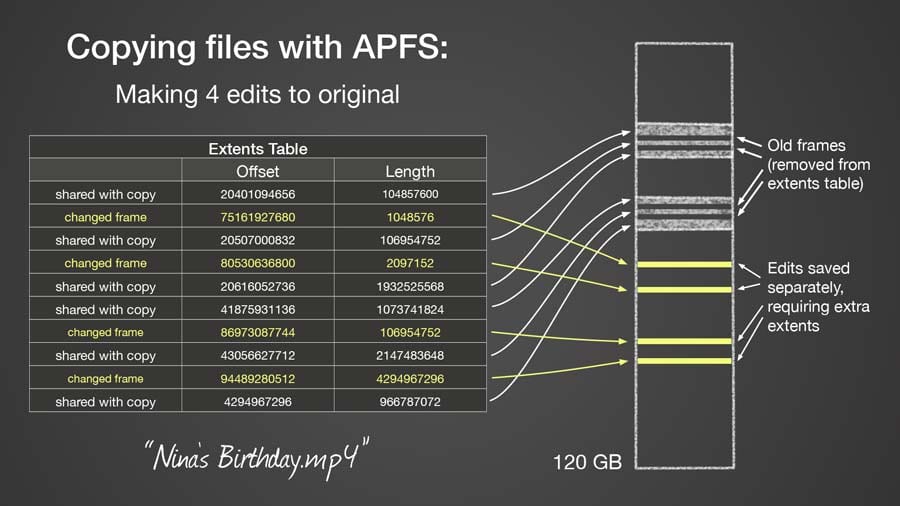

I mentioned above that with APFS, an original file and its copy will have identical extents tables. However, this is true only until you make a change to one of them. When I go to create the same 4 transitions in my movie that I created when using my HFS Extended volume, APFS has to find new, unused space on the disk to store these edits. It can’t write the edits over the original file, as the HFS Extended volume does, because then the changes would appear in both the original file and its copy (remember that the extents tables for the file and its copy point to the same location on the disk). That would be really bad.

Instead, APFS creates a new extent for each of the edits. It also has to create a new extent for the data that follows each transition: the part of the movie after the transition, which is still the same in both the original movie and its copy. Therefore, for each non-contiguous write, the file system has to create 2 new extents: one for the changed data and one for the original data (shared by the original file and its copy) that follows it. If this sounds complicated, it’s because it is. Reading the file now requires jumping back and forth between the location of the original data and the locations of all the changes, and each jump is recorded as a new extent.
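
The extent bookkeeping can be sketched as a Python function that redirects one edited range of a file to newly allocated space, splitting the extent it lands in. This is a simplified model assumed for illustration (sizes in MB, made-up disk offsets, edits that fall inside the file and away from extent boundaries), not APFS’s actual code. Applying four 1 MB edits to the two-extent movie reproduces the 10 extents mentioned in the next paragraph, while the copy keeps its original 2.

```python
from dataclasses import dataclass

MB = 1
GB = 1024 * MB  # all sizes and offsets are in MB to keep the numbers whole


@dataclass(frozen=True)
class Extent:
    offset: int  # disk position where this run of data starts
    length: int  # size of the run


def cow_write(extents: list[Extent], file_pos: int, length: int,
              new_offset: int) -> list[Extent]:
    """Return a new extents table in which bytes [file_pos, file_pos + length)
    of the file are stored at new_offset instead of being overwritten in place.
    Assumes the edited range lies entirely inside the file."""
    end = file_pos + length
    result: list[Extent] = []
    start = 0         # file position at which the current extent begins
    inserted = False  # has the extent for the new data been added yet?
    for ext in extents:
        ext_end = start + ext.length
        if start < file_pos:                     # untouched data before the edit
            result.append(Extent(ext.offset, min(ext_end, file_pos) - start))
        if not inserted and ext_end > file_pos:  # the edited data, in new space
            result.append(Extent(new_offset, length))
            inserted = True
        if ext_end > end:                        # untouched data after the edit
            skip = max(end, start) - start
            result.append(Extent(ext.offset + skip, ext.length - skip))
        start = ext_end
    return result


original = [Extent(100 * GB, 2 * GB), Extent(300 * GB, 8 * GB)]  # made-up offsets
copy = list(original)  # the clone's table: same extents, shared data

edited = original
for i, pos in enumerate([1 * GB, 3 * GB, 5 * GB, 7 * GB]):
    # each 1 MB transition is written to a fresh, previously unused area
    edited = cow_write(edited, pos, 1 * MB, new_offset=(900 + i) * GB)

print(len(copy), len(edited))  # 2 10
```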

After writing out my 4 transitions, my original movie file now has 10 extents. This might not seem like a lot of extents, but that’s for only 4 edits! Editing an entire movie, or even just retouching a photo, could result in thousands of extents. Imagine what happens with the file used to store your mail messages when you are getting hundreds or thousands of messages a week. And if you use Parallels or VMware Fusion, each time you start up your virtual machine it probably results in 100,000 writes. You can see that any of these types of files could easily end up with many thousands of extents.

Now imagine what will happen when your Mac goes to read a file with a thousand or more extents on an HDD. As the file system reaches the end of one extent and starts reading from the next one, it has to wait the 4–10 msec for the disk’s platter and head to get aligned correctly to begin reading the next extent. Multiply this delay by 1,000 or more and the time taken to read these files could become unbearably long.
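
The arithmetic behind that estimate, as a back-of-the-envelope Python sketch. It assumes every extent costs one full 4–10 msec repositioning and ignores the time spent actually transferring data.

```python
def seek_overhead_s(num_extents: int, delay_ms: float) -> float:
    """Time lost repositioning the head, assuming one delay per extent."""
    return num_extents * delay_ms / 1000


for n in (2, 10, 1_000, 10_000):
    low, high = seek_overhead_s(n, 4), seek_overhead_s(n, 10)
    print(f"{n} extents: {low:g} to {high:g} s of extra waiting per full read")
```

By this estimate, 1,000 extents add 4 to 10 seconds to every full read of the file, and 10,000 extents add 40 to 100 seconds.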

This long delay when reading large files is the reason I don’t recommend using APFS on HDDs. The delay occurs only with files that have been written to heavily after being copied, or after a snapshot of the volume was taken. But who wants to use a volume where you have to remember not to copy files or use Time Machine?

I think Apple is aware of this problem as they tell you not to automatically convert startup volumes on HDDs to APFS when upgrading to High Sierra. In addition, when erasing a disk, the Disk Utility application only chooses APFS as the default file system if it can confirm that the disk is an SSD.

The proof: I knew from the start that this was how copy on write was supposed to work, but just to be sure, I wanted to see what was actually going on at the disk level. Since I am the developer of SoftRAID, I can use the SoftRAID driver to watch what is actually happening.

I created a special version of the SoftRAID driver, which allowed me to record where on a disk the file system was reading and writing data and how much data was transferred each time. I then edited a file on both HFS Extended and APFS volumes.

With a file on an HFS Extended volume, I could see the original data being overwritten in the same location. I saw the same behavior with a file on an APFS volume as long as the file had not been copied and no snapshot existed for the volume. As soon as I copied the file or created a snapshot of the volume, all writes were made to new locations on the volume, locations that were not part of the original file.
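
The comparison can be expressed as a small analysis over a write trace. The format of the special SoftRAID driver’s log isn’t described here, so this Python sketch assumes a hypothetical trace of (disk offset, length) pairs and checks whether each write lands inside the blocks the file originally occupied (an in-place overwrite) or somewhere else (a copy-on-write redirect). The sample traces are invented to mirror the behavior described above; they are not actual measurements.

```python
def classify_writes(file_extents, writes):
    """Label each (offset, length) write as 'in place' or 'new location'."""
    labels = []
    for offset, length in writes:
        inside = any(
            start <= offset and offset + length <= start + size
            for start, size in file_extents
        )
        labels.append("in place" if inside else "new location")
    return labels


# Hypothetical file occupying two disk regions (offsets and lengths in MB).
file_extents = [(100, 2048), (300_000, 8192)]

hfs_like = [(1_124, 1), (303_000, 1)]    # overwrites inside the file's own blocks
cow_like = [(900_000, 1), (900_001, 1)]  # redirected to previously unused space

print(classify_writes(file_extents, hfs_like))  # ['in place', 'in place']
print(classify_writes(file_extents, cow_like))  # ['new location', 'new location']
```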

An edge case is Time Machine backups, and I wonder if APFS on an HDD might actually run faster than HFS+ on an HDD, for the reasoning at the link below (excerpt from Barney-15E’s post)…

https://discussions.apple.com/thread/254683529?answerId=258798672022#258798672022

Mar 15, 2023 9:49 AM in response to binaryme

The performance problems of APFS on spinning hard disk drives are pretty much irrelevant for Time Machine backups. The big performance problem is the extent file tree when files are opened, edited and rewritten to disk. Time Machine does not re-open the files, edit them and rewrite them to disk. It writes once. So, an HDD with APFS for Time Machine will work just fine. But the cost of SSDs is reaching parity with HDDs.

Great article… very helpful. But it leaves me (and I suspect others) with a dilemma. Here’s my situation:

I have a lot of SSD storage on my MacBook Pro (8TB internal SSD + 32TB external OWC Thunderblade)

I need to back up all 40TB to something using Time Machine

I bought a 64TB OWC Mercury Elite Pro Quad RAID thinking it’d be the perfect Time Machine disk for the task at hand

Because I run Monterey (12.4) on my Mac, Time Machine will only back up to APFS drives (not HFS+)

SoftRAID XT (and your article) cautions against formatting the 64TB Mercury Elite RAID as an APFS volume, saying performance may severely degrade over time

Allowing SoftRAID to format the 64TB Mercury Elite Pro RAID as an HFS+ volume works fine until you designate it in Time Machine as the target disk. At that point Time Machine says it’s “Preparing the disk for Time Machine,” immediately shows the progress bar at 50%, and then never makes any further progress and never throws an error, so I have to force quit System Preferences (during a potentially delicate operation)

So, what’s a fellow who needs to back up 40TB of disk to do? Should I format the Mercury Elite Pro RAID as APFS so it works with Time Machine, but take the risk of performance degradation over time? Or do I need to return the Mercury Elite Pro RAID and find a different solution? Or do I need to find a different backup solution than Time Machine (that would be very painful)?

How did you end up solving it? I have a 500 GB HDD and am deciding between APFS and HFS+. I want the advantages of APFS (snapshots, and copies that don’t duplicate the data). By the way, you can use HFS+ for Time Machine backups on macOS 12 Monterey with a workaround. Thanks.

You do not have to use APFS for Time Machine on Big Sur or Monterey. It’s enough to put the following folders on the drive after formatting it with HFS+ (Mac OS Extended Journaled) and before adding it to Time Machine. If these folders are present by the time you add the drive to Time Machine, it will not be reformatted with APFS:

Backups.backupdb/SomeMachineName/2022-07-30-060606

It’s best to create these three nested folders on your desktop first and copy them to your HFS+ Time Machine drive in one go, because if you create a folder called Backups.backupdb at the root level of a drive, your Mac won’t let you create anything in that folder or copy anything to it.

These three folders are all it takes to convince macOS that your drive is a valid Time Machine volume, so it will not reformat it with APFS when you add it to Time Machine on Big Sur or Monterey.
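
Creating the folders can also be scripted. This is a minimal Python sketch that builds the same nested folders inside a folder you choose (for example your Desktop, via Path.home() / "Desktop"). The machine name and date-stamped folder name are the placeholders from the comment above, and the helper name make_tm_placeholder is hypothetical. It only reproduces the workaround as the commenter describes it, which Apple does not document, and copying the result to the drive is still up to you.

```python
from pathlib import Path


def make_tm_placeholder(parent: Path, machine: str = "SomeMachineName",
                        snapshot: str = "2022-07-30-060606") -> Path:
    """Create Backups.backupdb/<machine>/<snapshot> under `parent` (hypothetical helper)."""
    target = parent / "Backups.backupdb" / machine / snapshot
    target.mkdir(parents=True, exist_ok=True)  # creates all three levels at once
    return parent / "Backups.backupdb"         # the top folder to copy to the drive
```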

This is all v interesting and much appreciated.

I am planning on moving my Lightroom photos (not the db or the previews or recent photos, which will be on an SSD) onto one of your lovely (2nd hand) ThunderBay 4 (TB2) enclosures, which will be configured as either RAID 0 (most likely) or RAID 5.

Given that LR is a non-destructive editor (i.e. it makes no changes to the photos themselves), would there be a case for formatting such HDD volumes as APFS? It is also worth noting that LR gives you the option of saving changes to XMP sidecar files as well as to the database, which in such a case may be less desirable.

Also…

As for my CCC backups of those same files: given that the files themselves are unlikely to change much (their folder location more than anything else, and even that would be rare), is there a case for formatting my backup HDDs as APFS?

I am attracted by the idea of Snapshots, which I can presumably prune as I do CCC SafetyNet folders.

Thanks and regards,

Have you collected any new data on this since your 2017 article?

I’m skeptical, as I’ve used APFS on all my drives (SSD or HDD) and have had no trouble with them. Including running VMs on APFS volumes.

Email messages are usually stored in separate files, .eml or .emlx, and so wouldn’t require copy on write functionality.

See ~/Library/Mail/V8

Your article also fails to mention the extra writes required when using the HFS+ Journaled filesystem. Maybe you’re advocating using HFS+ non-journaled?

Disk fragmentation, as you’re describing in this post, happens on all file systems that I know of, so it isn’t particular to APFS.

Finally, snapshots shouldn’t live forever, unless perhaps when created manually. Carbon Copy Cloner, TimeMachine, etc. release snapshots when they are finished backing up the data.

Hi Tim,

Has APFS developed enough to mitigate these concerns? I have a new external HDD that I will use for regular backups with Time Machine (and occasional large file storage on a separate partition), and am wondering whether to format it as HFS+ or APFS. Some of the files I backup will be audio files that I edit repeatedly – is APFS more likely to degrade these files over time than HFS+ would? My HDD manufacturer says “While you can format your hard disk drive (HDD) in APFS, performance may be degraded when files on the drive are routinely subjected to extensive editing (for example, large-scale image, video, audio, and music editing).”

Thanks much!

Unfortunately, Apple has refused to fix this problem, so any edited files on an APFS volume which uses HDDs will get slower each time they are modified. There is no fix in sight.

The additional problem is that, as of macOS 11, Apple now requires that Time Machine volumes be formatted with APFS.

My recommendation is to use HFS+ on all volumes which contain HDDs. I would also continue to use APFS-formatted Time Machine volumes on HDDs until the performance becomes unacceptably slow. At that point, I would look for alternative backup software.

I wish I had a more positive update to give you.

It looks like Apple says that they still support HFS+ for use with Time Machine:

https://support.apple.com/guide/mac-help/types-of-disks-you-can-use-with-time-machine-mh15139/12.0/mac/12.0

Thank you for pointing this out to me. Our main SoftRAID support person, Mark, is the one who told me APFS was required. I will ask him to test this and post a reply if I find anything different than what is on Apple’s support page.

Hey Tim,

Based on recent comments, it appears your position on APFS and HDDs hasn’t changed. I’m surprised APFS hasn’t become more HDD-friendly over the past few years. I’m assuming this applies to HDD RAID configurations too? I was looking for formatting guidance for a Mercury Elite Pro Dual mini in RAID 1 for Time Machine backups: HFS or APFS, encrypted or unencrypted? I have a thread on the Apple Community forum here as well: https://discussions.apple.com/thread/253009869

Thanks,

Mark

Hi Tim,

Thanks for this explanation. Unfortunately, even with some IT background, I don’t think I’ve grasped the full concept…

My issue is:

As a video editor, a few months ago I converted all my HDDs to APFS (especially for this snapshot thing, which helps me save a lot of space with FCPX, since for convenience my media are both outside and inside the library). But I’m mostly working on an external SSD anyway.

But now I’m quite concerned by your article…

I converted my backup drive back to HFS Extended, and the copying held up my work for more than 2 days…

Now my data storage is APFS and my backup is HFS, and I’m in the middle of some important projects, so I can’t spend another 2 days copying right now…

My question is: is there a risk of data loss or hardware failure from using APFS on an HDD in the short term?

I’m thinking of swapping my data and backup drives after my next backup session, so the APFS HDD will be under less demand until I have time to reformat it as well.

Thanks a lot !

I don’t think you will be in any greater danger of data loss by using APFS on your HDDs. In some of our tests, APFS volumes have proved to be much less susceptible to volume corruption than HFS ones.

I would expect that you might see slower read and write performance on this volume than you would if it were formatted as HFS. This is especially true for files you have either copied with the Finder or if you have created an APFS snapshot of the volume. Either operation will invoke the copy-on-write mechanism and all subsequent writes to the file (or volume in the case of a snapshot) will result in adding a large number of fragments to the file.

The worst case of this type of slowdown I heard of was a user who had VM (Virtual Machine) images on their HDD-based APFS volume; I can’t remember whether they were using Parallels Desktop or VMware Fusion. After a week or two of use, the guest operating system would become so slow that it was painful. The user would then copy the VM image to another volume and then back to the APFS volume. Once they performed the copy, the speed of their VM was restored. I think they got into the habit of doing this copy operation once a week.

I keep hoping that Apple will allow us to disable the copy-on-write feature in APFS on a volume-by-volume basis. So far no luck on this, however. I have found that copying files with the cp command in Terminal does not invoke copy on write. I believe this is because the cp command is part of the POSIX specification, which specifies two independent copies of the data on the underlying storage media.

Tim

Amazing, thank you so much.

I think I’ll try to revert back to HFS ASAP even if it means to find a way to improve my file management system…

But for now, the copy will indeed take much more space on HFS than on APFS. Is there a way, or an app, to list all the media that are copy-on-write clones?

Hi Tim.

Wondering if you can help.

I tried to update my Mac to Big Sur, and it wanted to use space on my external hard drive. Long story short, the update didn’t take, but now my hard drive is split.

The container disk3 has all 2 TB of my data on it, but I can’t access it. It won’t mount; it comes up with a Disk Management error.

Is there a way to get the files off this drive?

Sorry it’s vague, I’m not that tech savvy.

Regards

Carl

Thanks for your sharing.

Hi, Tim. I really appreciate your detailed article which helps me a lot in choosing the file system for my new HDD. It seems I had better use HFS+ for my storage disk. Thanks a lot!

What is your take on the fact that Microsoft recommends using APFS to sync OneDrive on an external drive?

I don’t know enough about how Microsoft is implementing their sync operation to be able to make an intelligent comment. Rather than pretending to know, I will leave it to others to weigh in on this.

Very nice and detailed article that finally made me realize why APFS is a bad idea for HDDs. The main reason I want to change my HDD to APFS is the smart way containers and volumes are handled when I add or delete partitions. In the past, I have lost data because the partitioning process failed midway, or other things just didn’t work. With large drives, it also takes forever to create partitions. And having to move a lot of big files to another drive just to delete all the partitions again is irritating. Is there any way to add, delete or resize partitions on an HDD the way APFS does, without creating all those extents when editing files? It would be amazing if there were a way to disable the APFS features that don’t work well with HDDs, through Terminal or something, and use APFS to partition an HDD in a much more intuitive way than HFS+ allows.

Hi Tim,

I am using an iMac Pro with 10.15.5 installed. Can I clone it to an HFS+ formatted mechanical drive using Carbon Copy Cloner, in order to have a bootable backup?

Thank you.

Max

Using an HFS+ startup volume is not supported in macOS 10.15. It might work but will probably fail at some point during an update, reboot or some other operation. The installer, update mechanism, virtual memory and boot system all now rely on file system features which are in APFS and are not supported in HFS+.

You can try it, but don’t expect it to work.

Tim,

This was an extremely helpful article. Thank you for taking the time to test and write your examples.

I’m considering using APFS for an external HDD because this same 2-month-old HDD had some apparent data corruption, and I can’t read files from it anymore, even though the files show up in Finder just fine. Now it seems I’m forced to erase the disk and reformat it. I was using HFS+ (Mac OS Extended Journaled) before, and have been using the same format on previous external HDDs.

I’m considering APFS for its resistance to data corruption, but my question is: does that reliability against corruption and errors still apply only to SSDs, or will I see this benefit on HDDs as well?

Some back story/info: This external drive I have is only used to copy/write large (20-30GB) movies to. It’s used for a Plex server. So I only ever read data from it to stream movies off of. No editing to the actual files/movies (besides renaming files and folders at most) will apply.

Please advise. Your help is greatly appreciated!

APFS only protects the file system information from data corruption (e.g. the file name, size, modification date, etc.). It offers no protection to the actual file data itself.

This only means that an APFS volume is less likely to become unmountable. It does not mean that your file is less likely to be corrupted.

If your disk is in a USB enclosure (USB 3 or USB-C, formally known as USB 3.1 Gen 2), then I would switch to a Thunderbolt enclosure. We routinely see more problems with USB enclosures.

Your mention that USB enclosures have many more problems than Thunderbolt has me concerned. I recently purchased a pair of OWC Mercury Elite Pro Quad RAID enclosures to use for storage of photos and other data. I chose them over the Thunderbolt 3 equivalent as they were substantially less expensive. That being said, I would happily spend more money if I knew I was going to be avoiding data corruption and loss in the future. Is this something I should be concerned about? Should I switch from USB-C Mercury Elite Pro Quad to Thunderbolt 3 ThunderBay 4 RAID? Thanks for your assistance!

I would switch from USB to Thunderbolt if it were me (and both of my personal enclosures are ThunderBay 4s). I know they are more expensive, but they are definitely worth the money. There is a certain type of volume corruption which we occasionally see, but we only ever see it on USB enclosures. I don’t believe this data corruption is limited to SoftRAID volumes.

I have never felt like USB storage software is a priority at Apple. We have reported numerous bugs to them and most of the time we get back a response which indicates that they are not willing to address the problem.

Tim

Hi,

Is this corruption associated only with internal drives that are being housed in external USB 3 enclosures or does it also happen with pre-assembled external USB 3.0 hard drives? Do you know what specifically is causing it?

I just bought a couple of identical 14 TB USB 3.0 external mechanical hard drives to back up all my old files from 1996-present and store my new files (and possibly my main user account), and I don’t want all that stuff to get corrupted. I didn’t want to get thunderbolt because (as we all know) they’re more expensive, but also because the drives can’t pass more than 6Gbps anyway without RAID so it seemed kind of pointless.

But yeah I don’t want my stuff to get corrupted…

Only you know how important your data is to you. If it were me, I would use Thunderbolt storage for at least one of your backups. In our office, we have 4 copies of all important files. The onsite backups are all on Thunderbolt storage (RAID 1+0) and the offsite ones are on USB-C. I don’t use USB 3.0 for single drives or RAID volumes and don’t use USB-C for RAID volumes. USB-C seems to be okay when only a single disk is involved.

This is just based on my experience. Your experience might be different.

Tim

Is it ok to use a “toaster”-style hard drive reader as a permanent external drive for backups and storage?

Such as this one:

https://eshop.macsales.com/shop/external-drives/owc-drive-dock

Hi, I’m installing an SSD (a kit from OWC) in a late 2014 Mac mini and moving the old spinning drive into an external USB 3 HDD enclosure. That drive has two Mac OS Extended (Journaled) partitions and an APFS boot partition, and it was getting crazy slow; after reading this, I know why. I’m running Mojave. Any ideas on how best to format and partition the new 1 TB SSD? Should I migrate the three volumes (from CCC), or just migrate from the (now external, USB 3) HDD to the new SSD? Is it best to format the SSD with three APFS partitions, or with 1 APFS and 2 Mac OS Extended (Journaled)? After this is all done, I will upgrade to Catalina and keep the old external HDD for some precious 32-bit applications and as a spare boot drive. Thanks

Russ

I would just create one APFS volume, in Disk Utility on the SSD. Then do a clean install of Catalina on it. You can then use Migration Assistant (“/Applications/Utilities/Migration Assistant”) to copy all of your documents, applications and settings over to your Catalina startup volume. Try it out for a couple of days and make sure everything works. If it doesn’t, you can go back to Mojave on the same SSD.

APFS is much smarter about how more than one volume can share the space on a single disk (or SSD). Once you create the first one, APFS will create a container to hold it. Then you can just go ahead and add more volumes to the same container and they all use different parts of the same container. It works really well on SSDs, less so on HDDs.

Tim

Here is an easy workaround. Why not just partition your HDD, install your OS and applications on the first partition as APFS, and put all your files on a second partition formatted as HFS+? Especially since we must now take extra steps to install Mojave and later on HFS! Then you really only need to back up your second partition to an external drive occasionally, which is much quicker than backing up all your apps and OS. Besides, in the unlikely event that the main APFS partition becomes corrupt, you will at least be able to reinstall all your apps and OS afterwards.

This seems, at first, like it should work. The problem is that the OS creates an APFS snapshot whenever you update system software. After a snapshot is made of the startup volume, all subsequent writes to existing files will result in fragmentation. If you have Time Machine turned on for your startup volume, or use a backup utility like Carbon Copy Cloner, which also uses APFS snapshots, you will also see greatly increased file fragmentation. All these fragmented files will result in a much slower Mac.

While file fragmentation has almost no effect on the performance of SSDs, it has a huge effect on HDDs.

I believe this fragmentation is the reason users are starting to encounter problems with their APFS Fusion volumes. (A Fusion volume combines a smaller SSD with a larger HDD to create one hybrid volume which uses both devices.) I have heard numerous reports at conferences of users having their Mac becoming unusably slow after converting their startup volume from an HFS Fusion volume to an APFS Fusion volume.

I’m a pro photographer. I do thousands of edits monthly. Or weekly sometimes – if you count each move to adjust or retouch a photo or video.

I’m working on a 2013 Mac Pro currently running Sierra. I’ve been considering updating to Mojave, since as time goes by it’s going to be harder and harder to use the newest versions of the software I’d like to use.

I currently use a two-SSD RAID 0 as my “work drive”, where I upload new jobs, edit and process them; it is backed up nightly to a single HDD. When each job is complete, I move it off the work drive to a single 10 TB archive drive.

And of course I have nightly backups of my startup and User volumes on HDDs.

The internal SSD is my start up volume with applications and system only. I keep my user volume on a separate drive because the internal is only 500 GB.

If what you’re saying is correct, this could cause me huge problems going forward if I update my internal start up volume and work SSD drives to APFS.

What do you suggest I do going forward? I can’t afford to make all my drives SSDs. And I’m not eager to spend $6000 on a new Mac Pro.

The drastic performance decrease caused by copy on write is only seen with HDDs. Since SSDs have no mechanical parts, they can read and write from non-contiguous parts of a volume without slowing down. An HDD must wait for the head mechanism to swing to a new track when reads and writes are non-contiguous, a process that can take up to 1/100th of a second.

My recommendation is to move your startup volume and your work SSDs to APFS volumes. APFS offers much better protection from volume corruption than HFS+. I am totally in favor of it for all applications except volumes on HDDs.

Keep in mind that SoftRAID is the only RAID software which implements TRIM on RAID volumes with SSDs. AppleRAID ignores all TRIM commands. Having your RAID solution pass TRIM commands to the SSDs can significantly improve performance (10-15%) in SSDs which have been under heavy use and are close to being full.

Mojave’s diskutil now has a “defragment” capability:

https://developer.apple.com/support/downloads/Apple-File-System-Reference.pdf

sudo diskutil apfs defragment /Volumes/miniStack enable

I found this article after formatting a large HDD with APFS, and have been wondering how a COW file system performs on an HDD with the new defragment flag enabled.

In the limited tests and experience I have with APFS on an HDD, I have not observed measurable performance hits, so I’ve deferred the pain of reverting an APFS-formatted drive to HFS.

I tested the defragment option in diskutil extensively with macOS 10.14, Mojave and was unable to create a situation where I saw a measurable difference after running the defragment option with APFS volumes on HDDs. I don’t believe this has changed with macOS 10.15, Catalina, but I will put it on my list of things to test this coming month.

What about using an APFS-formatted disk image on an HFS Extended mechanical drive? Say, a large image (many Gigabytes) used to backup the startup volume.

A disk image can only partially insulate the underlying drive from disk activity. For example, if the underlying drive fails after a billion writes, one cannot expect it to survive a trillion writes via a disk image.

Does an APFS disk image cause the same degradation in performance of the underlying drive, after many copy-on-writes?

For example, Sparse Bundle disk images are backed on the underlying drive using 8 MB bands, each of which is presumably allocated as contiguous storage. This perhaps has some “muting” effect: a sequence of small copy-on-writes could end up on the same band, where they might have ended up all over the place on an APFS-formatted mechanical drive.
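
The “muting” idea can be illustrated by mapping write offsets inside the disk image to the band files that hold them. This Python sketch assumes the 8 MB band size mentioned above and a made-up list of scattered copy-on-write offsets; it only counts how many distinct bands the writes touch, and says nothing about how the host volume lays those band files out on the platters.

```python
BAND_SIZE = 8 * 1024 * 1024  # 8 MB bands, as described in the comment above


def bands_touched(write_offsets):
    """Return the sorted band numbers that a set of image offsets fall into."""
    return sorted({offset // BAND_SIZE for offset in write_offsets})


# Ten hypothetical copy-on-write writes, all within the first 16 MB of the image.
offsets = [i * 1_600_000 for i in range(10)]
print(bands_touched(offsets))  # [0, 1]
```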

We don’t have access to Apple’s source code, but do you have any idea about this?

I have not tested the effect of using a disk image of an APFS volume stored on an HFS+ volume. I would expect that you would see similar degradation in performance if you mounted the volumes in the APFS disk image and then started reading and writing to them.

Remember that the OS makes a snapshot of your startup volume before every system software update. If you created or updated your APFS disk image while this snapshot still existed, you would incur all the performance penalties which copy on write causes.

Hello! Is this information still valid for recent macOS updates? I’m asking because external HDDs can be formatted as APFS.

I would like to know as well, as this article is over a year old and possibly outdated…

This is not a bug or fault. It is how Copy-on-Write is designed to work. COW is part of how APFS operates, and no update will change that, any more than an update could turn a dog into a cat. This article will remain valid until the end of the world with regard to using APFS on HDDs.

I have tested this with the release version of macOS 10.15, Catalina, and find that the performance degradation is identical to earlier releases of Mac OS when using APFS on HDDs.

Absolutely the best post ever about the Mac file system. Great job!

Thank you for these very clear and detailed explanations. This, along with the article about the “speed” of APFS vs. HFS, is what Apple should be writing. So more kudos to you. Mike Bombich of Carbon Copy Cloner has produced information of similar quality and clarity. Many, many thanks!!

May I offer a bit of history?

This BOLD! NEW! APFS approach was invented about 40 years ago at Computer Automation’s (now long deceased) Austin Development Center.

The original goal of this approach was to minimize disk space usage for large databases that were often modified (in the early ’80s, a 300 MB CDC Storage Module Drive was HUGE – both in storage capacity and in physical size…)

This “APFS” approach was originally called “Purple Arrows” because in the ADC staff meeting where the Filesystem guys explained it to the entire project staff (I was the compiler guy) they used black markers on our whiteboard to show the original file layout on disk, and then purple markers to show how changes & updates would be handled. [Look at the “Copying files with APFS” diagram above, and mentally translate the yellow arrows in it into Purple Arrows. This is EXACTLY what their whiteboard diagram looked like.]

And while it would be personally satisfying to be able to use Purple Arrows again, the recent decision by Apple to support only 64-bit apps in ongoing O/S releases leaves too many of my 32-bit legacy apps in the dust. So I’m stayin’ with 10.9.5! Hallelujah! Amen!

APFS makes FAKE copies?

That’s just… STUPID !!!

When I copy a file, I am protecting it against corruption of a sector making it unreadable. But under APFS I could make 100 copies and have only ONE physical location on the disk.

Unbelievably stupid.

Apple is brain dead.

I’ve never heard of someone making a duplicate of a file on the same HD/partition JUST to protect it from the possible corruption of a sector. That’s an unbelievably stupid and horribly inefficient practice of backing up your data. Any rational person who’s worried about data corruption would copy their data to another HD. And anyone who’s extremely paranoid about it, would make a copy onto a third HD and store it at a remote location.

I have (and do) make a duplicate of a file when I want to “archive” its current state, so that I can continue to modify the original. But, as soon as I make a modification, the entire file (all the data) gets copied.

And by the way, my external HDD (RAID) is APFS, however it is only used for backing up files, so the above issue with copy-on-write never affects it, as nothing is ever copied and nothing is ever modified on it.

‘Unbelievably stupid’ is a fool who replies in a knee-jerk fashion to someone with a legitimate concern over the dumb APFS ‘virtual copy’ protocol. There are plenty of extremely good reasons for making ‘real’ copies of files on the same HD. That you cannot think of one simply underlines your lack of vision.

Enlighten us, then: how can a duplicate file on your hard disk act as a guarantee against unexpected losses?

Nothing guarantees against losses. But many people make duplicate versions of files to have an original to go back to in case of human error. In addition, drives don’t always fail catastrophically. Sometimes problems occur and manifest only as read or write errors in a specific location. If you have two real copies, it is unlikely both would be unrecoverable. Yes, DUH, backup. But backup doesn’t happen all the time; there’s always some real gap between content creation and backup.

Just wanted to say thanks. Fully talked me out of converting my 2011 Mac mini to APFS.

Perhaps an MP4 movie file is not the best example. In most use cases, an MP4 video will not have transitions written back into the same file; rather, an editing app will usually render a new movie to a new file.

A better example would be Photoshop, Illustrator, or even InDesign files. PSD (Photoshop) files can be huge and are also copied to save points in time or to serve as templates. This would be more real-world, in my opinion.

The other interesting thing is that “COW” (copy on write) is a bit of a misnomer here, since what is really occurring is “ROW”, redirect on write: writes to an extent of the original file are redirected to a new extent. Don’t take my word for it though, Google it… I did! :]
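To make the redirect-on-write idea concrete, here is a deliberately simplified toy model. All names in it (`Extent`, `ToyVolume`, `clone`, `write_blocks`, `fragments`) are hypothetical. Real APFS metadata and block allocation are far more involved, and this model ignores them. A file is a list of extents. A clone copies only that list, and a write points the rewritten blocks at newly allocated space. Each physically separate run is one more place an HDD head has to seek to.

```python
from dataclasses import dataclass

@dataclass
class Extent:
    physical_start: int  # first physical block on the toy disk
    length: int          # number of contiguous blocks

@dataclass
class ToyVolume:
    next_free: int = 0   # toy allocator: hands out blocks from the end, never reuses

    def allocate(self, length):
        start = self.next_free
        self.next_free += length
        return Extent(start, length)

def clone(extents):
    # A clone duplicates only the extent list; both files share the same blocks.
    return [Extent(e.physical_start, e.length) for e in extents]

def write_blocks(volume, extents, offset, length):
    """Redirect-on-write: return a new extent list in which logical blocks
    [offset, offset + length) point at freshly allocated blocks. The old
    blocks are left untouched for any clone or snapshot still using them.
    Writes past end of file are simply appended (holes are not modelled)."""
    if length <= 0:
        return clone(extents)
    new = volume.allocate(length)
    end = offset + length
    result = []
    pos = 0              # logical block number where the current extent begins
    inserted = False
    for e in extents:
        e_end = pos + e.length
        if pos < offset:                     # part before the rewritten range
            result.append(Extent(e.physical_start, min(e_end, offset) - pos))
        if not inserted and e_end > offset:  # the redirected blocks go here
            result.append(new)
            inserted = True
        if e_end > end:                      # part after the rewritten range
            skip = max(end, pos) - pos
            result.append(Extent(e.physical_start + skip, e.length - skip))
        pos = e_end
    if not inserted:
        result.append(new)
    return result

def fragments(extents):
    """Count physically contiguous runs; on an HDD each new run costs a seek."""
    runs = 0
    prev_end = None
    for e in extents:
        if e.physical_start != prev_end:
            runs += 1
        prev_end = e.physical_start + e.length
    return runs

vol = ToyVolume()
original = [vol.allocate(100)]                    # a 100-block file stored in one piece
duplicate = clone(original)                       # instant "copy": no data is moved
duplicate = write_blocks(vol, duplicate, 40, 10)  # edit 10 blocks in the middle
print(fragments(original), fragments(duplicate))  # 1 3
```

Editing the original instead of the clone fragments it in exactly the same way. A file that is cloned and edited repeatedly, or edited while a snapshot exists, therefore drifts toward the 1,000- or 10,000-piece case described earlier.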

Tim,

Sadly, I’ve come to a slightly different conclusion about why you don’t want to use it.

The real issue is the limits of SATA I/O queuing. This affects both SATA-connected HDDs and SSDs!

Wow! Very illuminating! Thanks, Tim!

Funny, I saw the first comment and it said “Incredibly clear.” I had to laugh because I couldn’t follow past the definition of an offset. So, no, I don’t know much about this, but I did just have a run-in with APFS, and this article raises a question for me: I recently upgraded to High Sierra and discovered my SSD had been automatically reformatted to APFS, so I’m confused, as you seem to imply one has a choice not to convert. I was under the impression that APFS is the format High Sierra runs on, and that one had no choice. Could you elucidate?

If you have an SSD, you don’t have a choice. Your drive will automatically be converted to APFS.

Not entirely true. While this does happen automatically, you can get around it if you choose. Here’s some good info: osxdaily.com/2017/10/17/how-skip-apfs-macos-high-sierra/

Incredibly clear. Thank you.

If there’s an easy way to force a real copy, that’s the obvious “way out”. Hopefully it’s easier than copying to a second drive and back.

If you use the cp command in a Terminal window, it will force a real copy rather than a copy on write. I am investigating other mechanisms as well.
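As a sketch of what a “real” copy means, the hypothetical Python function below copies a file by reading and writing every byte. The file system then has to allocate new blocks for the destination instead of sharing the source's blocks the way a clone does. On APFS, the Finder's Duplicate command makes a clone. The function copies file contents only, not permissions or extended attributes. Whether the new blocks end up contiguous still depends on the free space available. For reference, the macOS `cp` man page documents a `-c` option that explicitly requests a clone via `clonefile(2)`, which is consistent with plain `cp` making an ordinary copy.

```python
import os

def real_copy(src, dst, chunk_size=8 * 1024 * 1024):
    """Copy src to dst by reading and writing every byte (hypothetical helper).

    Because the data goes through ordinary write() calls, dst gets its own
    newly allocated blocks; nothing is shared with src as it would be with
    a clone. Only file contents are copied, not metadata.
    """
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
        fout.flush()
        os.fsync(fout.fileno())  # push the new blocks out to the disk
    return os.path.getsize(dst)
```

Copying a cloned VM bundle's files this way (or with plain `cp`) gives the same result as the copy-out-and-back workaround described in the comments below, without needing a second drive.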

Have you had any luck working out ways to copy files that disable or bypass copy on write? The reason I ask is that I have noticed really odd performance degradation in Parallels virtual machines when copy on write gets used.

Initially I thought this was related to the fact that when I do backups, I usually shut down my VM and clone the entire thing from the internal SSD over to an external hard disk. Since this takes a while, most of the time I would just copy the file to another directory, which is blazing fast on the SSD, then do the copy to the backup disk while I went back to work in the VM.

However, with High Sierra I started seeing crazy disk performance problems in my VMs after doing this, because the copy I was sending to the external HDD was a copy-on-write clone (so not really a copy), and running Parallels on it created a lot of overhead.

Once I realized what was going on, I copied my VM in full to an external HDD, deleted it, and copied it back again, and voilà! The performance problems vanished.

However, I am now seeing some issues again after using Parallels for a long time on High Sierra, even though I stopped making local copies. I’m gonna try cloning to the external disk and copying it back again to see if that fixes it.

But ideally we need to find a way to make a copy without copy on write, to ‘flush’ the performance issues.

Or even better, we need a way for really large files to have copy on write completely disabled! Parallels does not need it.

Is this why my hard disk performance seems to have gone down since I “upgraded” to High Sierra?

It will only affect your HDD’s speed if you have converted your volume to APFS or have erased it and created a new APFS volume. If not, some other part of the upgrade is responsible for the drop in performance.

Have been waiting years for a new Mac Pro.

Would appreciate being advised as soon as one becomes available.

thank you.

glovideo

Have you reported the issue via Apple Bug Reporter?

http://bugreport.apple.com

It’s not a bug. This is the way a COW file system works.

I added a feature request to Apple’s bug reporting website asking for a way to disable copy on write. I think this would make APFS usable on HDDs.