Apple has officially announced that it will be transitioning from long-in-the-tooth Intel silicon to Apple silicon. What does this mean? How will it affect pros, consumers, and everyone in between? Keep reading for answers to all of the above.

But first, some background and techy stuff: instruction sets, and most importantly, the x86 vs. Apple silicon architectures.

Instruction Set

The defined set of instructions a processor understands, together with the features and constraints around them, such as IO, memory, physical requirements, data rates, and more. This is fundamentally important since it’s the “walls” within which everything from hardware to software needs to fit to work correctly.

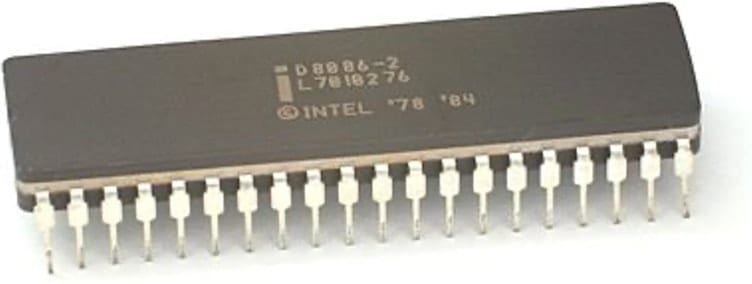

x86

The framework for CPUs from Intel, AMD, IBM, NEC, TI, STM, Fujitsu, OKI, Siemens, Cyrix, Intersil, C&T, NexGen, UMC, and DM&P since the late 1970s. If you have had a PC in the last 20 years, chances are it’s been x86-based.

ARM

Originally Acorn RISC Machine. The RISC (reduced instruction set computer) framework concentrates on reducing complexity and overall transistor count to accomplish the same tasks as CISC-based (complex instruction set computer) systems such as x86. It’s for this reason that ARM has a long pedigree in the industrial and embedded markets, in both SoC and SoM solutions.
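To make the RISC vs. CISC contrast a little more concrete, here is a minimal toy sketch in Python. It is not real x86 or ARM code: the mnemonics (`LOAD`, `STORE`, `ADD`, `ADDM`), the two registers, and the dictionary "memory" are all made up for illustration. It only shows the general idea that a CISC-style instruction can operate on memory directly, while a RISC-style program does the same job with several simpler load, compute, and store steps.

```python
# Toy machine: two registers plus a small named memory, both plain dicts.
# All mnemonics here are hypothetical and exist only for this illustration.
def run(program, memory):
    regs = {"r0": 0, "r1": 0}
    for op, *args in program:
        if op == "LOAD":        # RISC-style: copy memory -> register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "STORE":     # RISC-style: copy register -> memory
            reg, addr = args
            memory[addr] = regs[reg]
        elif op == "ADD":       # register-to-register add
            dst, src = args
            regs[dst] += regs[src]
        elif op == "ADDM":      # CISC-style: add one memory cell into another
            dst_addr, src_addr = args
            memory[dst_addr] += memory[src_addr]
        else:
            raise ValueError(f"unknown op {op}")
    return memory


# One complex instruction that touches memory directly.
cisc_program = [("ADDM", "a", "b")]

# The same work as a sequence of simple load/compute/store steps.
risc_program = [
    ("LOAD", "r0", "a"),
    ("LOAD", "r1", "b"),
    ("ADD", "r0", "r1"),
    ("STORE", "r0", "a"),
]

cisc_result = run(cisc_program, {"a": 2, "b": 3})
risc_result = run(risc_program, {"a": 2, "b": 3})
```

Both programs leave the same values in memory. The RISC-style version needs more instructions, but each one is simple enough that the hardware decoding it can, in principle, be simpler too.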

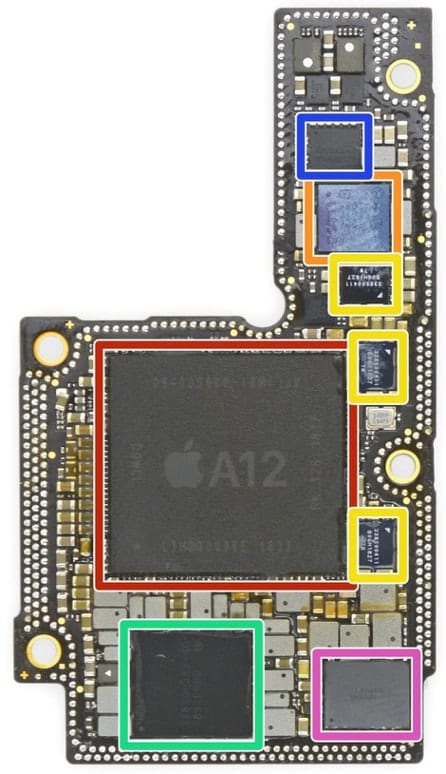

SoC (System on Chip)

A means of combining all hardware resources on a single silicon chip or chip package, instead of physically connecting separate hardware to a baseboard via PCIe, SATA, or other interconnect standards. If you’re reading this on a tablet, smartphone, or streaming device, you already own an SoC. But why would Apple want to use an architecture historically used in smartphones, tablets, and smart thermostats? There isn’t just one reason, as you will read below.

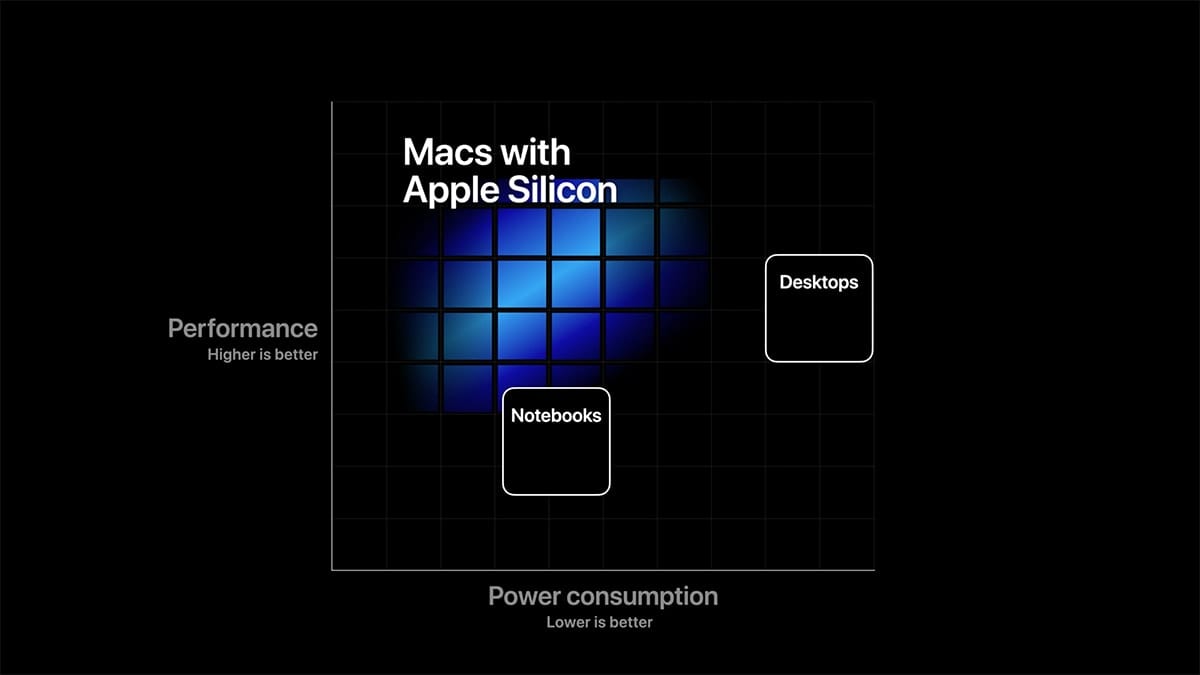

Some inherent benefits of System on Chip architecture (not specifically Apple silicon):

- Smaller package for the same given functionality

- More throughput = more performance

- Lower production costs

- Lower per-unit costs

- Less complexity = lower failure rate

- Less thermal waste = less heat

- Drastically lower power consumption (eco-friendly)

- Less latency means potentially better audio/video workflow

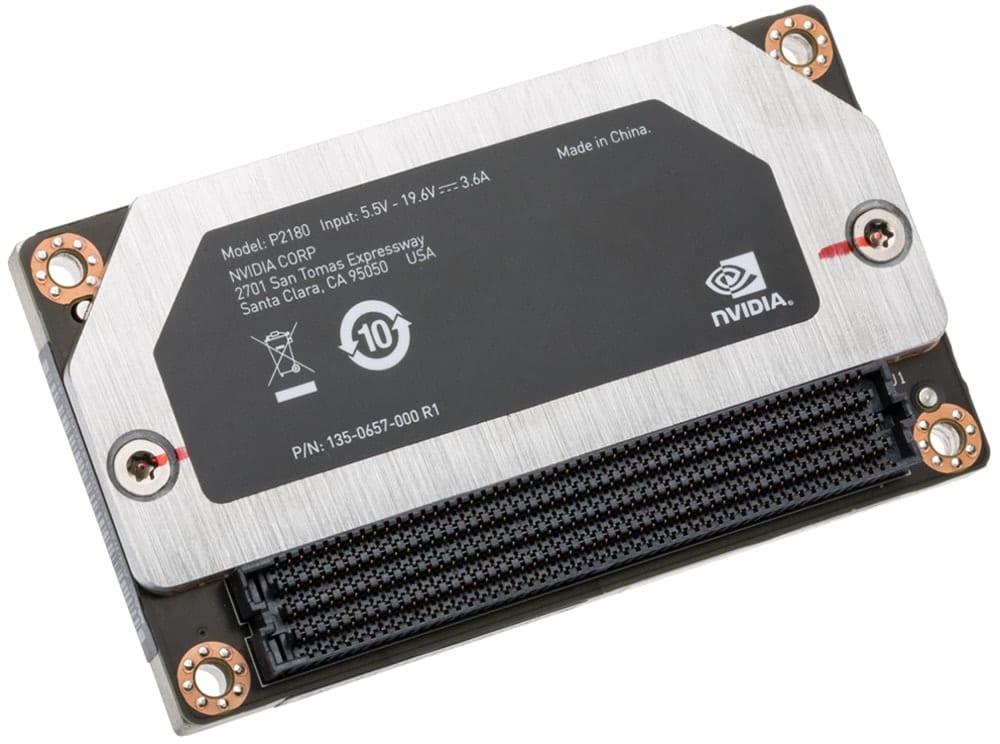

SoM (System on Module)

An application-specific computing device designed to add a feature, or features, to an existing architecture. An SoM can be as simple as a mezzanine card, or as exotic as an Apple Afterburner, which can offer massive hardware acceleration and take load off the CPU so it can handle other tasks. Tight integration between PCIe, compute, and other subsystem resources will allow developers to produce custom cards, modules, and IO that will dramatically enhance the value and performance of hardware for years to come.

What’s Next?

Now that all sounds swell, but what does it mean in the real world? Simply put, the future of technology is changing, the market is changing, and consumers are changing. People care less and less about the hardware and more and more about what they can do with it. Apple understands that the future of technology is not about specs; it’s about having the most integrated overall solution for the job.

It’s for this reason, among many, that Apple has adopted a more modular architecture. Using the ARM architecture will allow for far better hardware integration. Apple no longer has to wait for the other kids in the sandbox to play nice. It has put its silicon down and drawn a line in the sand, pardon my shameless pun.

Apple Development System

What is available to developers (Apple’s Developer Transition Kit) is effectively a tablet SoC in a Mac mini chassis. This will allow developers to start building tools and software around this new architecture.

For now, anybody on the x86 train will get continued support through the Rosetta translation environment. This means that x86 software will still have a compatibility layer, maintained by Apple, to run on, even after developers stop building code for x86. Developers who have jumped on board will be able to start making changes for the future.
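For readers who want to see which side of the transition a given program is running on, here is a small Python sketch. `platform.machine()` is part of Python’s standard library; the helper name `describe_arch` and the labels it returns are made up for this example. One assumption worth flagging: a program running under a translation layer such as Rosetta is generally expected to report the x86 architecture it was built for, not the ARM hardware underneath, so treat the result as "what this process thinks it is running on."

```python
import platform


def describe_arch(machine: str) -> str:
    """Map a machine string (as reported by platform.machine()) to a family label.

    The labels are illustrative only; they are not an official naming scheme.
    """
    m = machine.lower()
    if m in ("arm64", "aarch64"):
        return "ARM (64-bit)"
    if m in ("x86_64", "amd64"):
        return "x86 (64-bit)"
    return f"other ({machine})"


if __name__ == "__main__":
    # Reports the architecture as seen by this Python process.
    print(describe_arch(platform.machine()))
```

Keeping the mapping in a separate function makes it easy to check against known strings without needing both kinds of hardware on hand.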

Apple has learned from its past mistakes when it comes to leaving the Pro market. Do I need to bring up the trashcan Mac Pro, or the 2019 Mac Pro?

But there is light at the end of the tunnel. Apple is back to doing what Apple does best, setting a trend that others will follow. To put it bluntly, we won’t see huge performance gains for a few years as developers get on board with Apple silicon and, more importantly, its new architecture. My head starts spinning at the possibility of what is to come next. A Mac mini with 128 cores? Modular computing on a stick? A Mac Pro with no PCIe limitations? Who knows, but the future looks brighter than ever – and the future is now.

From an individual consumer standpoint, the upside I see is this.

I should be able to purchase a top-of-the-line MacBook Pro or an iMac for a 4th-tier price because the snob appeal will force prices down.

That is my expected result anyway.

An ARM Mac Pro with PCIe 4 or 5 and USB4. But that’s about two years from now.

“– and the future is now.”

Well, maybe this Fall.

Did you ever hear of a company called Motorola? They made the chips for Apple before Intel. Apple never used Pentium chips, only moving to Intel when the Core series became available. If the basic premise is false, why should I believe the rest of the article?

I think it would be nice to have apps from my iPhone also run on my Mac. This is one step closer and a plus, but I fear that Apple is closing itself off from standardized protocols and interfaces. Soon we will have little from outside and be forced to live in an Apple bubble, should we choose it, without much in the way of options. I hope Apple chooses to support both ARM and Intel moving forward as the two architectures merge.

All platforms are bubbles. Wintel is a bubble, albeit currently the largest platform bubble.

The debate about RISC vs. CISC processors has raged for at least 40 years. The last major company to hang its hat on the RISC bandwagon was Sun Microsystems, whose SPARC processors reached their peak in the 1990s. Now, it appears Apple is taking the same step with ARM. Does Apple’s dominant position in the market (Macs being far more popular than Sun products ever were) mean that nothing can go wrong … go wrong?

FIRST LAW OF STRATEGY (business or war): No plan of engagement survives contact. If it does, it’s a trap. Or, to put it succinctly, anything that can go wrong will, or already has.

Apple had a dozen years of running on IBM/Motorola/Apple (AIM) 6xx PowerPC RISC chips, starting in the 1990s. They made a big deal about it when they switched from Motorola’s 68k chips, which powered the early Macs.

Will the new ARM-based Macs virtualize (not just emulate, which is slower and trouble-prone!) Windows using VMware Fusion or Parallels Desktop?

Will the new ARM-based Macs run native Windows using Boot Camp in the future?

I mean, could these two things be technically possible in the future? I think that it all depends on Microsoft releasing a true 64-bit native Windows for ARM. I think that the current version is just 32-bit.

On the other hand, I think that the main real reasons for Apple to move from Intel x86 to ARM-based Macs are not performance per watt, battery life, power, etc., but simply to unify their platforms and gadgets, save money, and design and control everything. A huge saving for them. Note that the Mac represents a small percentage of Apple gadgets, after all (much more revenue comes from the iPhone, for instance, and a similar amount from the iPad).

Microsoft does have a 64-bit ARM version of Windows (ARM64). It can run ARM64 apps natively, but can also run ARM32 and x86 (32-bit) Intel apps. Drivers, however, need to be in the ARM64 format, meaning that if a vendor does not have such a driver, the device will either not function or function only with the base capabilities in its boot ROM (such as a graphics card displaying only at native resolution with no acceleration, as happens on the Mac side if drivers aren’t loaded).

Thanks. Then, what is missing for Microsoft ARM64 is to run x86 (64-bit) Intel apps? For instance, to run Office for Intel (64-bit)?

The only way you can run x86 code on an Apple silicon Mac will probably be through emulation/transcoding on an x86 hypervisor. This could be done through instruction interpretation or by intercepting the Windows segment loader and transcoding x86 to AArch64 code there.

Parallels is being smugly quiet about their future plans.

You really don’t want to run Windows on ARM in a native hypervisor – there’s really no compelling Windows ARM software that you’d want to run. Any ARM code a Windows transcoder would create would probably be for a Qualcomm SQ1 which would not necessarily work on an Apple silicon system.

Apple’s been building their own silicon for over a decade, and I believe one of the first modifications they made to standard ARM processors was to alter the vector instruction parameter list to be more like Intel’s.

Additionally, Apple long ago wrote 32-bit instructions out of iOS and removed ARMv7 logic from their CPUs. Lord knows if a Microsoft Intel-to-ARM transcoder would emit code counting on 32-bit mode instructions being present.

More sunshine from Apple. But what Apple did best, and better than anyone else, was put the users, ALL the users, in control. That is not going to happen. This is all great—but they are counting on the “gotta have the newest, ‘best,’ thing from Apple, no matter what the cost.” And as long as enough people can afford it, this snob appeal will keep Apple afloat. Yes, it may be better technology, but if all it does is add more golly-gee-whiz-bang entertainment and eye candy, it’s no good at all.

In my shop, we don’t look at new Pro-level desktops until 5 to 6 years have passed. The controlling thing is the apps like Adobe and Avid and Cinema 4D. Pro is not eye candy, it’s work.

Apple has never been known for putting users in control; quite the opposite is what they’re known for.

Now, that is increasingly true. The first Apple computer I used was a Mac Plus. Since the advent of OS X, Apple has become increasingly controlling. Before OS X, programmers hated Apple, because Apple required them to follow Apple’s rules, which led to a robust OS and fewer kludgy applications. Since OS X, they have extended their control to the users, and far too many users will simply swallow whatever Apple chooses to feed them.

You say ARM will perform better, but you offer no examples, possibly because there are none. Apple is taking a leap into the unknown. Whether it’s the future or not remains to be seen. How well will they execute their new chip architecture? Microsoft flopped with it a decade ago. A return to Rosetta will certainly help with the transition, something missing in Catalina. The best way to run older Mac systems in Catalina is with Parallels or VMware Fusion. I guess if you can afford a new Mac (and all the dongles needed to use it with existing hardware) you can theoretically afford to buy Parallels (and learn how to use it). Sorry, but I remain to be convinced ARM is a good place to run OS X. Of course, computers are so powerful now that running apps in Rosetta won’t overload most systems.

I’m pretty sure Apple has been secretly testing OS X on ARM for a few years and they know very well what its performance will look like with proper optimization. Apple is also privy to Intel’s forward-looking technology pipeline, and that’s where the straw broke the camel’s back. Intel is running into a wall with x86 and they know it. Intel itself has been trying to design a new architecture for the last 20 years; remember Itanium? Intel Itanium was a complete failure. Apple sees that ARM has much more room to grow in both performance and efficiency. All Intel can do at this point is keep kicking the can down the road with x86 till they develop something new, which at this point may not happen for another decade or so.

You need to see what is out there now.

https://amperecomputing.com/altra/?gclid=EAIaIQobChMIzcfqx7a06gIVmo3ICh3MFQpREAAYASAAEgJfRPD_BwE

Here’s an article from Anandtech comparing a high-performance, actively cooled 7nm Lightning core (found in the A13 in iPhone 11) to competitive cores: https://www.anandtech.com/show/15875/apple-lays-out-plans-to-transition-macs-from-x86-to-apple-socs. This measures down-on-the-metal performance as opposed to Geekbench’s functional benchmarks, which probably use non-CPU SoC IP blocks.

Note that Skylake 10900K has the edge in integer, and 10900K, Ryzen 3950X, and Cortex-X1 (already at 5nm) have the edge in floating point.

10900K is the new overclockable Intel Core-i9 CPU, which has two additional cores in an attempt to compete with Ryzen. It’s clearly reached the limits of physics, as Intel had to shave the top of the chip to expose enough surface area to cool it. It’s got a 125W TDP, but in real life can draw over 300W. It’s a 10th gen 14nm Intel CPU which (unlike its brethren) did not make it to 10nm, possibly because they needed more surface area.

The Mac SoCs will be built on TSMC’s 5nm node, which will yield additional speed and efficiency, but they will also benefit from another year of the Apple silicon team’s optimization.

Last year, I believe the central focus of the silicon team was efficiency, although they vastly improved the speed of matrix multiplication and division (by some 6x) which are used in machine learning. They introduced hundreds of voltage domains in the SoC capable of shutting down parts of the chip not in use, and yet still managed to improve the speed of the high performance and high efficiency cores by 20% (despite no shrink in mask size).

This year, I fully expect the A14’s Firestorm cores to match or exceed 10900K’s performance core for core, although we don’t know if A14 will include SMT which can nearly double the multicore performance for any given core count.

Keep in mind that since Apple will design the Mac SoCs, they can include any number of high performance Firestorm, high efficiency Icestorm, neural engine, and graphics cores as they deem fit. They won’t have to pick which Intel part to use. They can also include any IP blocks which currently exist for iPhone, as well as any other new IP blocks they want – say for PCIe lanes which I believe will be necessary for Thunderbolt support as well as later high performance machines like the Mac Pro.

The first Mac SoC is rumored to be a 12-core SoC with 8 Firestorm and 4 Icestorm cores, probably to be used in some sort of laptop.

Remember that the DTK shown at WWDC was cobbled together with an A12Z (from the iPad Pro), which is essentially an A12X with an additional graphics core, and that the A12 is a two-year-old design. I believe they used this SoC because it has four Vortex high performance cores vs. every recent iPhone design’s two.